Performance Automation Testing

Why do Performance Automation Testing?

Advantages of Performance Automation Testing With Us?

Industries We Work With

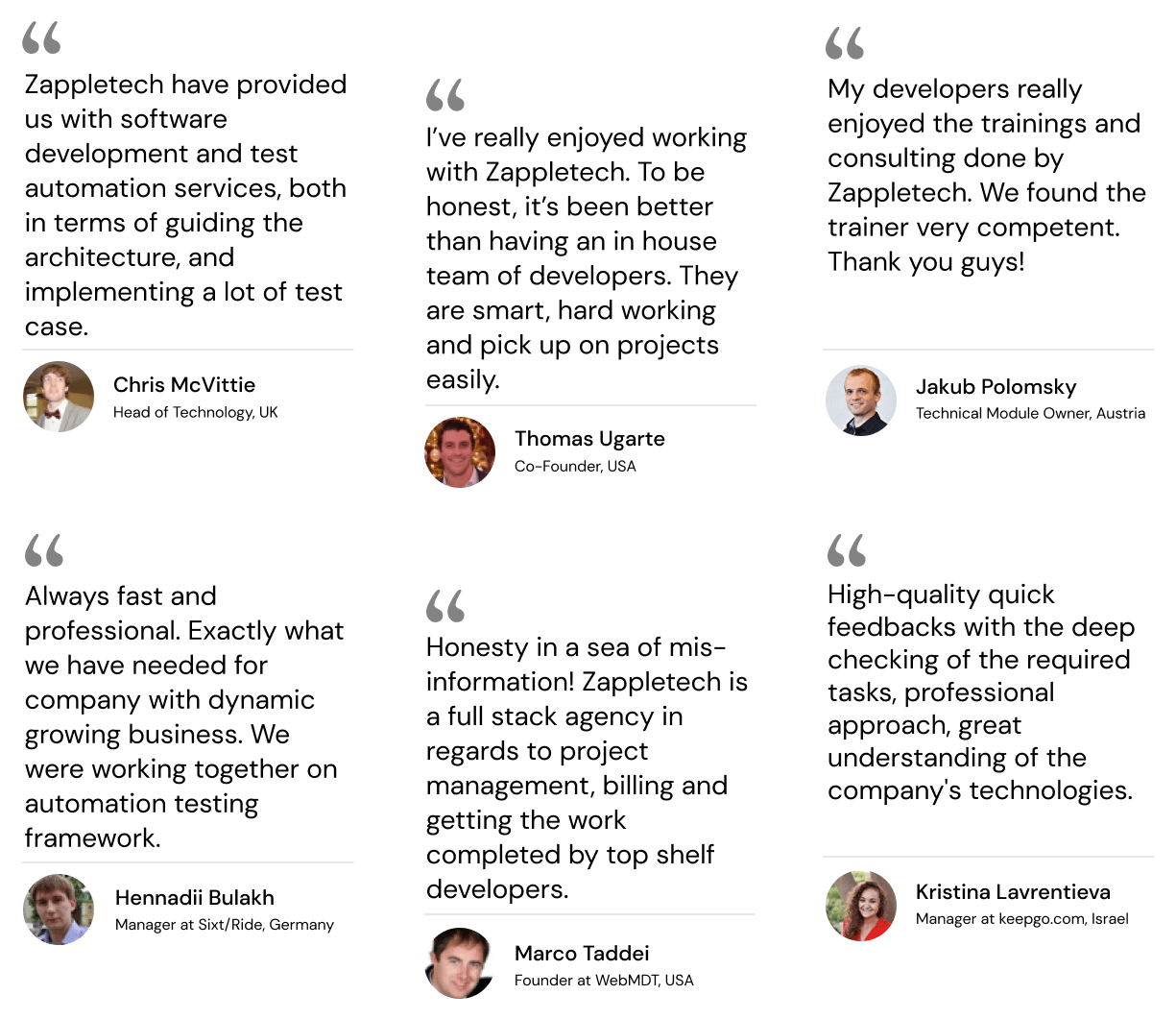

What Our Customers Say

Performance Automation Testing Services We Offer

Our Expertise

Web App Testing Tools and Technologies

Start Cooperation Now!

Why Should You Choose Automated Performance Testing Services?

Performance testing is pivotal in the software development lifecycle, ensuring that applications meet performance expectations under various conditions. It evaluates responsiveness, scalability, and stability to guarantee optimal user experience. As software systems become increasingly complex and user demands evolve, the need for robust performance testing methodologies becomes more pronounced.

In response to this growing demand, automated performance testing services have emerged as a game-changer in software testing. These services use automation tools and techniques to streamline the performance testing process, offering greater efficiency and accuracy. In this article, we will look at the significance of performance testing, explore the rise of automated performance testing services, and examine their impact on software development practices.

The Need for Performance Testing

In today’s hyper-competitive digital landscape, software application performance can make or break a business. Users expect seamless and responsive experiences when accessing a website, mobile app, or enterprise software solution. Failure to meet these expectations can result in user dissatisfaction, lost revenue, and damage to brand reputation.

Traditional performance testing methodologies, which often rely on manual processes and scale poorly, struggle to keep pace with the demands of modern software development. Manual performance testing is time-consuming, labor-intensive, and prone to human error. Moreover, it cannot provide the comprehensive coverage and rapid feedback needed to identify and address performance issues promptly.

Enter automated performance testing services, offering a paradigm shift in how performance testing is conducted. By harnessing the power of automation, these services enable organizations to execute performance tests quickly, efficiently, and with high precision. Performance test automation empowers teams to easily and accurately simulate real-world scenarios, such as heavy user loads or fluctuating network conditions.

Automated performance services leverage scripting, virtualization, and monitoring tools to automate the testing process, from test design and execution to result analysis and reporting. This accelerates the testing cycle and enhances test coverage and reliability. As a result, organizations can uncover performance bottlenecks early in the development lifecycle, reducing the risk of costly rework and post-deployment failures.

In the following sections, we will delve deeper into the core principles of automated testing services, explore their benefits over traditional testing approaches, and discuss key considerations for implementation. Additionally, we will examine real-world case studies highlighting the transformative impact of automated testing on organizations across various industries. Finally, we will explore future trends and challenges in the field of performance testing automation, paving the way for continued innovation and improvement.

Understanding Automated Performance Testing Services

Automated performance testing delivered as a service is a comprehensive solution designed to systematically measure how a software application behaves under load. These services rest on a few core principles: efficiency, repeatability, and scalability.

Efficiency comes from using tools and test frameworks that make test execution fast and accurate. These platforms can automate repetitive tasks such as test case creation, data preparation, and results analysis, freeing up time and resources so that teams can focus on more strategic aspects of software development.

Automating test execution removes manual variation, which keeps results consistent and reliable. With automated performance testing services, a test scenario is defined once and then executed identically on every run, so the results of successive runs are directly comparable. This consistency is essential for detecting small deviations from the performance baseline and for tracking longer-term trends.
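
As a minimal Python sketch of the "define once, run identically" idea, the hypothetical `Scenario` class below describes a test as a weighted set of user actions. It is not the interface of any particular service; it only shows that seeding a private random generator makes every run replay the same sequence of actions, so results from successive runs stay comparable.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """A hypothetical, minimal test scenario definition.

    The scenario is defined once; the fixed seed makes every run
    generate exactly the same sequence of simulated user actions.
    """
    name: str
    actions: tuple   # e.g. ("login", "search", "checkout")
    weights: tuple   # relative frequency of each action
    steps: int       # number of actions to generate per run
    seed: int = 42   # fixed seed -> identical sequence on every run

    def generate(self) -> list:
        # A private Random instance is not affected by other code
        # that also uses the global random module.
        rng = random.Random(self.seed)
        return rng.choices(self.actions, weights=self.weights, k=self.steps)


checkout_flow = Scenario(
    name="checkout_flow",
    actions=("login", "search", "add_to_cart", "checkout"),
    weights=(1, 5, 3, 1),
    steps=20,
)

# Two independent runs produce the same action sequence.
run_a = checkout_flow.generate()
run_b = checkout_flow.generate()
print(run_a == run_b)
```

Changing the seed (or any other field) deliberately produces a new scenario, which keeps "same test" and "different test" explicit.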

Scalability is another defining principle of automated performance testing services. These services scale up and down to match the volume of tests run across different environments, platforms, and user groups. Whether the testing covers a simple web application or a complex distributed system, they can be adapted to the varying requirements of the software under test.

Overview of Key Features and Functionalities

| Feature | Description |
|---|---|
| Test Scripting | Automated performance testing services provide intuitive interfaces or scripting languages for defining test scenarios, specifying user actions, and configuring test parameters. |
| Test Execution | Automated performance services can execute complex test scenarios across multiple environments, browsers, and devices with the click of a button, ensuring broad coverage and accurate results. |
| Load Generation | These services simulate realistic user loads by generating virtual users or injecting synthetic traffic into the application under test. This enables teams to assess the application’s response to varying levels of concurrent user activity. |
| Monitoring and Analysis | Automated performance services offer robust monitoring and analysis capabilities, allowing teams to track key performance metrics in real time, identify performance bottlenecks, and generate comprehensive reports for stakeholders. |
| Integration | These services integrate with existing development and testing tools, such as continuous integration/continuous deployment (CI/CD) pipelines, version control systems, and issue tracking platforms, facilitating smooth collaboration and workflow automation. |
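
The load generation idea can be illustrated with a small Python sketch that runs concurrent "virtual users" in a thread pool. `run_load` and `virtual_user` are hypothetical helpers written for this example, and `target` stands in for whatever sends one request to the application under test. Threads suit this I/O-bound pattern; production tools add ramp-up schedules, think time, and distributed load injectors on top of it.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def virtual_user(target, requests_per_user):
    """Simulate one virtual user issuing sequential requests.

    Returns (latencies_in_seconds, error_count) for this user.
    """
    latencies, errors = [], 0
    for _ in range(requests_per_user):
        start = time.perf_counter()
        try:
            target()
        except Exception:
            errors += 1  # count the failure and keep the user running
        latencies.append(time.perf_counter() - start)
    return latencies, errors


def run_load(target, virtual_users, requests_per_user):
    """Run `virtual_users` concurrent users against `target`.

    `target` is any zero-argument callable, e.g. a function that sends
    one HTTP request to the application under test (hypothetical here).
    """
    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        futures = [pool.submit(virtual_user, target, requests_per_user)
                   for _ in range(virtual_users)]
        results = [f.result() for f in futures]
    all_latencies = [lat for lats, _ in results for lat in lats]
    total_errors = sum(err for _, err in results)
    return all_latencies, total_errors


# Stand-in for a real request: sleep 10 ms to mimic server work.
def fake_request():
    time.sleep(0.01)


latencies, errors = run_load(fake_request, virtual_users=10, requests_per_user=5)
print(f"{len(latencies)} requests, {errors} errors, "
      f"max latency {max(latencies) * 1000:.1f} ms")
```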

Comparison with Manual Performance Testing Methods

Manual performance evaluation has long been the traditional approach, but it cannot match the efficiency and effectiveness of automated performance testing services.

Manual testing is laborious and time-consuming because people are involved at every step, from executing each test to delivering the results. Because the effort is manual, tests are hard to scale and nearly impossible to repeat exactly. This makes comprehensive test coverage difficult and delays feedback.

Conversely, automated performance testing services can take over recurring and time-consuming tasks, run without constant supervision, and deliver insights into application performance based on the results. Automation reduces human error, shortens testing time, and gives teams the opportunity to fix performance issues early in the development process.

Most automated solutions can also scale to very large loads, enabling teams to simulate thousands or even millions of virtual users. Such scalability is crucial for assessing how responsive and stable web applications remain under abrupt, unexpected changes in highly dynamic environments.

In short, automated testing services are a significant step forward from manual testing: they produce consistent, measurable results and are far less prone to human error. Adopting automation is an effective way for companies to speed up software delivery, improve product quality, and provide a better customer experience.

Benefits of Automated Performance Testing Services

Automated performance testing services reduce human error and generate results faster and more accurately than traditional manual testing techniques. They let test engineers eliminate repetitive tasks and use specialized testing tools that shorten performance test cycles and deliver reliable outcomes.

Automation also speeds up test execution. Manual testing requires executing test cases one by one, whereas automated testing services can run hundreds of scenarios simultaneously using distributed test environments and virtual user simulation. Faster execution exposes performance problems at early development stages, reducing the risk of delayed releases and post-deployment failures.

Automated performance testing systems also help localize and fix performance problems faster. By monitoring real-time metrics for every component, they can flag performance issues as soon as they appear and show which piece of code or which database query is responsible. These detailed insights help developers identify performance problems promptly and address them one by one, resulting in a more responsive and scalable application.
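
Real services usually rely on APM agents or distributed tracing to attribute latency to individual components. The Python sketch below is a much simpler, hand-rolled stand-in: a hypothetical `timed` context manager accumulates elapsed time per named component so that the slowest one, here a simulated database query, stands out. The component names and sleep durations are illustrative assumptions.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Accumulated wall-clock time per named component (e.g. "db_query").
component_times = defaultdict(float)


@contextmanager
def timed(component):
    """Attribute the elapsed time of the enclosed block to `component`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        component_times[component] += time.perf_counter() - start


def slowest_components(n=3):
    """Return the n components with the largest total time, slowest first."""
    return sorted(component_times.items(), key=lambda kv: kv[1], reverse=True)[:n]


def handle_request():
    # Hypothetical request handler split into instrumented components.
    with timed("auth"):
        time.sleep(0.002)
    with timed("db_query"):
        time.sleep(0.02)  # the deliberately slow part
    with timed("render"):
        time.sleep(0.005)


for _ in range(5):
    handle_request()

for name, seconds in slowest_components():
    print(f"{name:10s} {seconds * 1000:.1f} ms")
```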

Beyond improving efficiency and accuracy, automated testing services can also be cost-effective and scalable for businesses of all sizes. Setting up and running a full-fledged performance testing infrastructure has traditionally been expensive, requiring hardware, software licenses, and highly qualified personnel. With automated performance testing services, companies can instead use cloud-based testing platforms and pay-per-use pricing models, reducing costs and scaling the environment up or down as needed. These widely accessible tools are leveling the playing field, so organizations of any size can apply rigorous testing standards without massive up-front investment.

In summary, automated performance services offer many benefits, including improved efficiency and accuracy, faster identification and resolution of performance bottlenecks, cost-effectiveness, scalability, and real-time monitoring and reporting capabilities. By embracing automation, organizations can improve performance testing practices, enhance product quality, and deliver exceptional user experiences in today’s competitive marketplace.

Implementation Considerations

When selecting an automated performance service, several factors must be carefully considered to ensure its suitability for the organization’s needs and objectives.

First and foremost, organizations must assess the capabilities and features offered by different performance testing services. Not all performance testing tools are created equal, and it’s essential to evaluate whether a particular service aligns with the organization’s testing requirements. This includes considering factors such as the types of performance tests supported (e.g., load testing, stress testing, endurance testing), scripting capabilities and supported scripting languages, and compatibility with the technologies and platforms used in the organization’s software stack.

Integration with existing development and testing processes is another critical consideration. A seamless integration between the automated performance testing service and other tools and systems used in the software development lifecycle (SDLC) is essential for maximizing efficiency and productivity. This includes integration with version control systems, continuous integration/continuous deployment (CI/CD) pipelines, issue tracking platforms, and project management tools. The ability to automate test execution as part of the CI/CD pipeline ensures that performance testing becomes an integral and automated part of the development process, providing rapid feedback to developers and stakeholders.
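
A common way to make performance testing part of a CI/CD pipeline is a gate step that fails the build when a key metric regresses. The Python sketch below assumes the load test has already produced a list of response times in milliseconds and that a baseline 95th-percentile value was stored from an earlier accepted run; `p95` and `check_regression` are hypothetical helper names, and the 10% tolerance is an arbitrary example.

```python
import math


def p95(samples_ms):
    """95th percentile using the nearest-rank method."""
    if not samples_ms:
        raise ValueError("no samples to evaluate")
    ordered = sorted(samples_ms)
    rank = math.ceil(95 * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def check_regression(samples_ms, baseline_p95_ms, tolerance=0.10):
    """Pass if the current p95 is within `tolerance` of the baseline p95."""
    current = p95(samples_ms)
    limit = baseline_p95_ms * (1 + tolerance)
    return current <= limit, current, limit


# Placeholder inputs; in practice these come from the test run's result
# file and a stored baseline.
current_run_ms = [120, 135, 128, 142, 150, 131, 125, 138, 160, 129]
baseline_p95_ms = 150.0

passed, observed, limit = check_regression(current_run_ms, baseline_p95_ms)
print(f"p95 = {observed} ms, limit = {limit:.0f} ms, passed = {passed}")
exit_code = 0 if passed else 1  # non-zero is what fails the CI step
```

In an actual pipeline job, the script would typically finish with `sys.exit(exit_code)`, since most CI systems treat a non-zero exit code as a failed step.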

The testing infrastructure’s scalability and flexibility are also paramount. As software applications continue to grow in complexity and scale, the performance testing infrastructure must scale dynamically to accommodate testing needs. Automated performance services should offer the flexibility to scale up or down based on demand, leveraging cloud-based resources and distributed testing architectures. This ensures that performance tests can simulate realistic user loads and scenarios, regardless of the application’s size or complexity.

Additionally, organizations must ensure that the automated performance testing service complies with industry standards and regulations. Depending on the industry and the nature of the application being tested, specific regulatory requirements and compliance standards may need to be adhered to. For example, applications handling sensitive customer data may need to comply with data protection regulations such as GDPR or HIPAA. Therefore, choosing a performance testing service that provides features and functionalities to support compliance with these standards, such as data anonymization, encryption, and audit trails, is essential.
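
Data anonymization can take many forms. One common technique for test data is keyed pseudonymization, sketched below in Python with the standard `hmac` module. The set of sensitive field names, the token length, and the key handling are assumptions for illustration. Note that under GDPR, pseudonymized data is generally still treated as personal data, so this technique reduces exposure but does not by itself take the data out of scope.

```python
import hashlib
import hmac

# Fields treated as sensitive in this example (an assumption; adjust to
# your own data model and regulatory requirements).
SENSITIVE_FIELDS = {"email", "name", "card_number"}


def pseudonymize(record, key):
    """Replace sensitive field values with keyed HMAC-SHA256 tokens.

    The same input always maps to the same token under the same key, so
    relationships in the test data (e.g. repeat customers) are preserved,
    but the original value cannot be read from the token alone.
    """
    masked = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS:
            digest = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = digest.hexdigest()[:16]  # shortened token
        else:
            masked[field] = value
    return masked


# Hypothetical key; in practice load it from a secrets manager.
secret_key = b"replace-with-a-key-from-a-secrets-manager"
customer = {"id": 1017, "email": "jane@example.com", "name": "Jane Doe", "plan": "pro"}
print(pseudonymize(customer, secret_key))
```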

Future Trends and Challenges

Emerging trends in automated performance testing:

- AI-driven testing: As artificial intelligence (AI) and machine learning (ML) technologies continue to advance, automated performance testing services will incorporate AI-driven algorithms to optimize test scenarios, predict performance bottlenecks, and analyze test results more intelligently. AI-driven testing will enable automated tools to adapt dynamically to changing application architectures and user behaviors, leading to more accurate and efficient performance testing.

- Continuous performance monitoring: Organizations increasingly adopt continuous monitoring solutions to detect and address performance issues in real time. Continuous performance monitoring integrates seamlessly with automated testing frameworks, providing ongoing visibility into application performance metrics and enabling proactive performance optimization. This trend towards continuous monitoring ensures that performance testing becomes an integral part of the development and deployment process rather than an isolated activity performed at specific milestones.
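
Continuous monitoring often comes down to evaluating a metric over a moving window and alerting when it crosses a threshold. The hypothetical `RollingP95Monitor` class below sketches that idea in Python; the window size and threshold are illustrative, and real monitoring platforms compute percentiles with more efficient streaming data structures rather than re-sorting on every sample.

```python
import math
from collections import deque


class RollingP95Monitor:
    """Track the most recent response times and flag p95 breaches.

    `window` and `threshold_ms` are illustrative parameters, not values
    taken from any particular monitoring product.
    """

    def __init__(self, window=100, threshold_ms=500.0):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.threshold_ms = threshold_ms

    def record(self, latency_ms):
        """Add one observation; return True if the rolling p95 exceeds the threshold."""
        self.samples.append(latency_ms)
        ordered = sorted(self.samples)
        rank = math.ceil(95 * len(ordered) / 100)  # nearest-rank percentile
        return ordered[rank - 1] > self.threshold_ms


monitor = RollingP95Monitor(window=20, threshold_ms=300.0)
normal = [monitor.record(120.0) for _ in range(20)]
degraded = [monitor.record(800.0) for _ in range(5)]
print(any(normal), degraded)
```

Because the check uses the 95th percentile rather than the maximum, a single slow request inside a 20-sample window does not trigger an alert, while a sustained slowdown does.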

Potential challenges and limitations of automated performance testing services:

- Complexity of modern applications: Modern software applications are becoming increasingly complex, with distributed architectures, microservices, and cloud-native technologies. Automated performance testing may struggle to keep pace with the evolving complexity of these applications, leading to challenges in accurately simulating real-world usage scenarios and identifying performance bottlenecks across interconnected components.

- Security and compliance: Ensuring the security and compliance of automated performance testing tools and processes presents a significant challenge, particularly in regulated industries such as healthcare and finance. If not implemented and configured properly, automated testing tools may inadvertently expose sensitive data or violate regulatory requirements. Moreover, ensuring the security of test environments and test data becomes more challenging in cloud-based and multi-tenant testing environments.

Strategies for overcoming these challenges and maximizing the benefits of automation:

- Invest in comprehensive training and skill development: To address the complexity of modern applications and ensure the effective use of automated performance testing tools, organizations should invest in training and skill development for their testing teams. Training programs should cover not only the technical aspects of automated testing tools but also best practices in performance testing methodologies, test design, and result analysis.

- Implement robust security and compliance measures: Organizations should implement robust security and compliance measures to mitigate the risks associated with automated testing. This includes implementing data encryption, access controls, and audit trails to protect sensitive data and ensure compliance with industry standards and regulations such as GDPR, HIPAA, and PCI DSS.

- Embrace a shift-left approach to testing: By shifting performance testing left in the software development lifecycle, organizations can detect and address performance issues earlier in the development process when they are less costly and time-consuming. Implementing automated testing as part of the continuous integration and continuous delivery (CI/CD) pipeline enables teams to identify performance regressions early and ensure that performance requirements are met before deployment.

- Collaborate cross-functionally: Effective collaboration between development, testing, operations, and business stakeholders is essential for maximizing the benefits of automated performance testing. By breaking down silos and fostering cross-functional collaboration, organizations can ensure that performance testing aligns with business objectives, accelerates time-to-market, and delivers value to end-users.

In summary, while automated performance testing services offer significant benefits in terms of efficiency, accuracy, and scalability, they also present challenges related to the complexity of modern applications, security and compliance requirements, and organizational readiness. By embracing emerging trends, addressing potential challenges, and implementing strategies for success, organizations can harness the full potential of automated performance testing and deliver high-quality software products that meet the demands of today’s digital economy.

Looking back over this discussion, it is clear that automating performance tests is no longer a luxury but a necessity in modern software development. The driving force is the user, whether an end user of a consumer application or an internal stakeholder relying on enterprise software.

Automated performance testing services make it easier for organizations to verify that applications run correctly under normal conditions as well as under congestion and peak load. By automating repetitive tasks and using testing frameworks, developers gain flexibility and shorter testing cycles, which improves overall product quality.

The benefits for end users are also evident. When performance issues are identified and resolved quickly, bottlenecks disappear and users spend less time waiting on applications. The real-time monitoring and reporting these systems provide can pinpoint the type and severity of performance problems so that they are resolved before they cause downtime or degraded performance.

Flexibility and scalability, key features of automated performance testing services, allow organizations to adapt to changing user demands and business requirements with minimal friction. Whether the goal is scaling to handle heavy user traffic or fine-tuning performance for new features, automated performance testing helps organizations keep pace in today’s highly dynamic business environment.

However, the journey towards implementing automated performance testing has its challenges. Organizations must navigate factors such as integration with existing development and testing processes, scalability of testing infrastructure, and compliance with industry standards and regulations. Moreover, the cultural shift towards embracing automation requires stakeholders’ buy-in and a commitment to ongoing learning and optimization.

Overall, automated performance testing holds great potential for companies that adopt it to keep up with competitors’ standards and customers’ expectations. Teams should make it a priority to get the most out of automation in managing testing processes and improving performance results. In doing so, organizations will be better equipped to manage the changes that matter so much in today’s digital world.

Frequently Asked Questions:

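What is performance testing, and what are its types?

Performance testing evaluates how an application behaves in terms of speed, scalability, and stability under a given workload. Common types include load testing, which checks behavior under the expected user load; stress testing, which aims to discover an application’s breaking point; endurance testing, which ensures the program can withstand the expected load for an extended period; and spike testing, which examines the software’s response to sudden, large spikes in user-generated load.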
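
These test types differ mainly in how load changes over time. The Python sketch below expresses each one as a hypothetical `users_at` function returning the target number of concurrent virtual users at a given minute; a plain load test at the expected level is included for comparison. The ramp rates, spike window, and user counts are illustrative assumptions, not recommended values.

```python
def users_at(profile, t, base_users=100):
    """Target number of concurrent virtual users at minute `t`.

    `base_users` represents the expected (normal) load.
    """
    if profile == "load":
        # Ramp up over 5 minutes, then hold at the expected load.
        return min(base_users, base_users * t // 5)
    if profile == "stress":
        # Keep increasing past the expected load to find the breaking point.
        return base_users + base_users * t // 5
    if profile == "endurance":
        # Hold the expected load steady for a long period (e.g. hours).
        return base_users
    if profile == "spike":
        # Normal load with a sudden 10x burst between minutes 10 and 12.
        return base_users * 10 if 10 <= t < 12 else base_users
    raise ValueError(f"unknown profile: {profile}")


for profile in ("load", "stress", "endurance", "spike"):
    schedule = [users_at(profile, t) for t in range(0, 15, 2)]
    print(f"{profile:10s} {schedule}")
```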

Why is performance testing important?

Performance testing can be used to assess a variety of success factors, including response times and potential errors. With these performance results in hand, you can confidently detect bottlenecks and defects and decide how to optimize your application to eliminate the problem.

When should we do performance testing?

The first load tests should be performed by the quality assurance team as soon as several web pages are functional. Performance testing should be part of the daily testing regimen for each build of the product from that point forward.

What is KPI in performance testing?

Key Performance Indicators, or KPIs, are measures that organizations use to assess their performance and success against factors they consider relevant and vital. In performance testing, typical KPIs include response time, throughput, error rate, and resource utilization such as CPU and memory usage.
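
For performance testing specifically, KPIs are usually derived from the raw results of a test run. The Python sketch below computes a few common ones (throughput, error rate, average and percentile response times) from a list of response times. `compute_kpis` is a hypothetical helper, the nearest-rank percentile is only one of several percentile definitions in use, and the sample numbers are placeholders rather than measured data. Resource metrics such as CPU and memory utilization would come from server-side monitoring and are not covered here.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def compute_kpis(latencies_ms, errors, duration_s):
    """Summarize one test run into common performance-testing KPIs.

    latencies_ms: response time of every request (failed ones included), in ms
    errors:       number of failed requests among them
    duration_s:   wall-clock length of the run, in seconds
    """
    total = len(latencies_ms)
    return {
        "requests": total,
        "throughput_rps": total / duration_s,
        "error_rate_pct": 100.0 * errors / total,
        "avg_ms": sum(latencies_ms) / total,
        "p90_ms": percentile(latencies_ms, 90),
        "p95_ms": percentile(latencies_ms, 95),
        "max_ms": max(latencies_ms),
    }


# Placeholder numbers for illustration, not measured data.
run = [110, 95, 130, 240, 105, 98, 125, 500, 115, 101]
kpis = compute_kpis(run, errors=1, duration_s=5.0)
print(kpis)
```

Reporting percentiles alongside the average matters here: a single 500 ms request pulls the average well above the typical response time, while the p90 and p95 values show how the slowest tail behaves.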